By Dr. Jered Borup

Introduction and Background

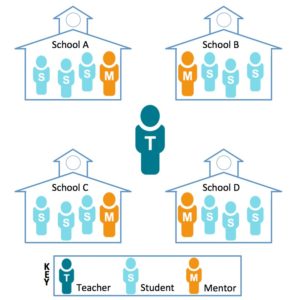

Learning online can be challenging for many students because they need to master the content while they simultaneously develop the knowledge and skills required to learn online (Lowes & Lin, 2015). This is particularly true for online students who are attempting to recover credit from a class that they failed face-to-face. As a result, online credit recovery students require an especially high level of support and attention. Research has found that the nature of online learning affords teachers the flexibility to provide students with a level of personalized and targeted support that is difficult to provide in face-to-face environments (Velasquez, Graham, & Osguthorpe, 2013). However, it is easier for students to reject the support that their teacher offers when the teacher is not physically present (Borup, Graham, & Drysdale, 2014). As a result, schools are increasingly providing online students with support from on-site mentors, also called facilitators (see Figure 1). In fact, some states, such as Michigan, now require that an employee at the brick-and-mortar school provide online students with mentoring support. Although some schools are required to provide on-site mentoring support, they are allowed to determine what that support looks like. Therefore, the level and kinds of on-site mentoring support can vary greatly between and within brick-and-mortar schools (Freidhoff, Borup, Stimson, & DeBruler, 2015). Researchers have also found that online students are more successful when they are provided with a monitored lab and a set learning schedule (Roblyer, Davis, Mills, Marshall, & Pape, 2008). However, it is less clear how mentors can effectively support students while they are in the lab.

Typically, tutoring students in the content is seen as the purview of the instructor (the content expert), and mentors are charged with ensuring that students stay engaged in the course (Harms, Niederhauser, Davis, Roblyer, & Gilbert, 2006). However, researchers have found that, in practice, mentors commonly answer students’ content-related questions, especially when the mentor is certified to teach that subject (Barbour & Mulcahy, 2004; Barbour & Hill, 2011; de la Varre, Keane, & Irvin, 2011; O’Dwyer, Carey, & Kleiman, 2007). Some online teachers welcome mentors’ instructional efforts, while others believe that such efforts undermine their role as the online teacher (de la Varre et al., 2011). Researchers at American Institutes for Research (AIR) recently made an important contribution to our understanding of mentors’ instructional efforts by comparing the effectiveness of mentors who provided instructional support with that of mentors who did not (see Taylor et al.’s 2016 Research Brief 2: The Role of In-Person Instructional Support for Students Taking Online Credit Recovery). In this blog post, I summarize and critique the AIR report findings.

American Institutes for Research’s Report on Mentoring

The AIR research brief examined ways that Chicago Public Schools’ (CPS) students recovered Algebra IB credit, which is required for graduation, the summer after they failed the course in the ninth grade (Taylor et al., 2016). Data were collected over two summers (2011 and 2012) and included 1,224 students across 17 CPS high schools. Students were randomly assigned to either a face-to-face or an online summer course. In total, there were 38 online courses and 38 face-to-face courses, each with an average of 16 students. Most online and face-to-face students completed 60 hours of seat time over three to four weeks. The primary difference was that face-to-face students attended classrooms and were taught by certified math teachers who were physically present, whereas online students attended classrooms but received instruction asynchronously through online learning activities facilitated by online teachers who were certified to teach math. Students who were enrolled in the online course also received support from an on-site mentor.

Researchers explained that “participating schools selected school staff to serve as the in-class mentors and the in-class mentors were not required to be certified in mathematics” (p. 2). About half (53%) of the mentors were certified to teach high school mathematics, but it was unclear how many of the remaining mentors were certified teachers in other subjects and how many were other school staff.

Mentors’ primary responsibilities were:

- Fulfilling administrative classroom tasks

- Proctoring exams

- Assisting students with technological issues

- Communicating with the online teacher

Mentors were provided with some professional development (PD), but it was unclear what the PD entailed or what its quality was. While on-site mentors were not asked to provide students with instructional or tutoring support, they were not discouraged from doing so.

For the purpose of this research, mentors were asked to maintain daily logs of their time by recording the amount of time they spent answering students’ questions about mathematics and the amount of time they spent on administrative tasks such as proctoring exams and classroom management.

Researchers then grouped mentors based on the amount of time they reported spending on instructional support. The 15 mentors who reported spending at least 12 hours on instructional support (that is, at least 20% of the 60 hours of class time) were categorized as “instructionally supportive,” and the 21 mentors who reported spending less than 12 hours on instructional support were categorized as “less-instructionally supportive.” Student demographics and previous performance in math were similar across groups. The exception was ninth-grade suspension rates: 43% of students with less instructionally supportive mentors had been suspended from school in grade 9, compared to 34% of students with instructionally supportive mentors. Student demographic information was not provided for face-to-face students, and it is unclear what their suspension rates were in grade 9.
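To make the grouping rule concrete, here is a minimal Python sketch of the 12-hour cutoff as the brief describes it. The function name, the constant names, and the sample logged hours are hypothetical illustrations and are not taken from the AIR report.

```python
# Illustrative sketch of the grouping rule described above.
# All names and the sample hours below are hypothetical.
CLASS_HOURS = 60         # seat time reported for the summer course
THRESHOLD_PERCENT = 20   # share of class time used as the cutoff


def categorize_mentor(instructional_hours: float) -> str:
    """Label a mentor by the hours they logged answering math questions."""
    cutoff = CLASS_HOURS * THRESHOLD_PERCENT / 100  # 12 hours
    if instructional_hours >= cutoff:
        return "instructionally supportive"
    return "less instructionally supportive"


# Hypothetical logged totals for three mentors (not data from the brief).
for hours in (4.5, 12.0, 20.0):
    print(hours, categorize_mentor(hours))
```

Because the rule is a hard cutoff, a mentor who logged just under 12 hours and one who logged exactly 12 hours fall into different groups, which is worth keeping in mind when reading the group comparisons.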

Researchers found that online students were less likely to pass their course than face-to-face students (66% vs. 76%), a finding that is supported by previous research (Freidhoff, 2015; Miron, Gulosino, & Horvitz, 2014). The finding that grabbed the most headlines was the following:

Students with instructionally supportive mentors had higher credit recovery rates [77%] than students with less-instructionally supportive mentors [60%], and credit recovery rates were similar to their face-to-face counterparts [76%]. (p. 7)

Interpreting these Findings

The finding that students with instructionally supportive mentors have higher pass rates than students who had less instructionally supportive mentors is interesting but should be understood within the context of this research.

Somewhat at odds with this finding, the percentage of possible points earned was similar for students with instructionally supportive mentors (36%) and students with less instructionally supportive mentors (35%). Comparing means alone can be misleading, and a better understanding could have been achieved if the researchers had also reported the standard deviation (SD) and graphed the actual grades that students achieved, similar to what was provided in their other research brief (Heppen et al., 2016). Student performance on a post-test was also similar for face-to-face students (M=280) and for online students regardless of whether they had an instructionally supportive mentor (M=274) or a less instructionally supportive mentor (M=273).
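The point about means can be illustrated with a small Python sketch. The scores and the cutoff below are invented purely for illustration and are not data from the AIR brief; the sketch only shows how two groups with the same mean can differ in spread and in the share of students above a given cutoff.

```python
from statistics import mean, stdev

# Hypothetical "percent of possible points" scores for two groups of students.
# These numbers are invented for illustration and are NOT from the AIR brief.
group_a = [30, 32, 34, 36, 38, 40, 42]   # tightly clustered
group_b = [5, 10, 15, 36, 55, 60, 71]    # widely spread

HYPOTHETICAL_CUTOFF = 30  # assumed cutoff, chosen only for this example


def summarize(scores, cutoff=HYPOTHETICAL_CUTOFF):
    """Return the mean, sample SD, and share of scores at or above the cutoff."""
    share_at_or_above = sum(score >= cutoff for score in scores) / len(scores)
    return {
        "mean": mean(scores),
        "sd": stdev(scores),
        "share_at_or_above": share_at_or_above,
    }


for name, scores in (("A", group_a), ("B", group_b)):
    print(name, summarize(scores))
```

Both hypothetical groups have a mean of 36, yet their spread and the share of scores at or above the assumed cutoff differ, which is the kind of detail that a reported SD or a graph of actual grades would reveal.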

Comparing final course grades was also problematic because online students’ grades were based on both academic performance and in-class behavior, whereas face-to-face students’ grades were based only on academic performance. As a result, a disruptive online student may have received a lower grade than a face-to-face student who behaved similarly. It is important to remember that mentors who provided less instructional support were working with students who had higher suspension rates in grade 9 (43%) than students whose mentors provided higher levels of instructional support (34%). It is possible that some mentors provided less instructional support because they were required to spend more of their time on classroom discipline, either because the students they mentored had more defiant tendencies or because the mentor was less skilled at classroom management. Regardless of the reason, mentors who experienced a high level of discipline issues may have had little time to provide students with instructional support and may have given their students lower grades as a result of their behavior. Therefore, the differences in pass rates may, in part, actually reflect mentors’ ability to efficiently and effectively manage the classroom and build relationships with students. Additional background information, such as mentors’ classroom or mentoring experience and the professional development opportunities they’d been provided, would also have allowed readers to consider alternative factors that may have affected mentors’ ability to facilitate students’ learning.

Another limitation of this research was that it relied heavily on self-report data from mentors. Within the report, the researchers defined instructional support as the “time they spent answering students’ questions about mathematics — either the content presented in the online course or mathematics topics that are needed to understand Algebra 1” (p. 3). However, the researchers did not report how this definition was communicated to mentors, and it is unclear how well mentors distinguished time spent tutoring students in mathematics and time spent answering questions about specific assignment directions and requirements.

Despite these limitations, this research makes a significant contribution to our understanding of how mentors can influence student performance. The researchers correctly stated that “this brief raises important questions about the role of in-class mentors in supporting students as well as the need for face-to-face instructional support for students to recover credit in key courses required for graduation” (p. 9). More research is needed that addresses the limitations of this research in a variety of course subjects with varying types of students. It may be that instructionally supportive mentors are more important in math than in other content areas such as social studies and language arts. Similarly, instructionally supportive mentors may be more important with non-credit-recovery or Advanced Placement students. This research also examined student performance in summer courses that required students to meet four hours a day for three to four weeks; researchers may find different results in courses where students’ learning is distributed across a more traditional semester.

References

Barbour, M. K., & Hill, J. (2011). What are they doing and how are they doing it? Rural student experiences in virtual schooling. Journal of Distance Education, 25(1). Retrieved from http://www.jofde.ca/index.php/jde/article/view/725/1248

Barbour, M., & Mulcahy, D. (2004). The role of mediating teachers in Newfoundland’s new model of distance education. The Morning Watch, 32(1). Retrieved from http://www.mun.ca/educ/faculty/mwatch/fall4/barbourmulcahy.htm

Borup, J., Graham, C. R., & Drysdale, J. S. (2014). The nature of teacher engagement at an online high school. British Journal of Educational Technology, 45(5), 793–806. doi:10.1111/bjet.12089

de la Varre, C., Keane, J., & Irvin, M. J. (2011). Dual perspectives on the contribution of on-site facilitators to teaching presence in a blended learning environment. Journal of Distance Education, 25(3). Retrieved from http://www.jofde.ca/index.php/jde/article/viewArticle/751/1285

Freidhoff, J. R. (2015). Michigan’s K-12 virtual learning effectiveness report 2013-2014. Lansing, MI: Michigan Virtual Learning Research Institute. Retrieved from https://media.mivu.org/institute/pdf/er_2014.pdf

Freidhoff, J. R., Borup, J., Stimson, R. J., & DeBruler, K. (2015). Documenting and sharing the work of successful on-site mentors. Journal of Online Learning Research, 1(1), 107–128.

Harms, C. M., Niederhauser, D. S., Davis, N. E., Roblyer, M. D., & Gilbert, S. B. (2006). Educating educators for virtual schooling: Communicating roles and responsibilities. The Electronic Journal of Communication, 16(1 & 2). Retrieved from http://www.cios.org/EJCPUBLIC/016/1/01611.HTML

Heppen, J., Allensworth, E., Sorensen, N., Rickles, J., Walters, K., Taylor, S., Michelman, V., & Clements, P. (2016). Getting back on track: Comparing the effects of online and face-to-face credit recovery in Algebra I. Washington, DC: American Institutes for Research. Retrieved from http://www.air.org/sites/default/files/downloads/report/Online-vs-F2F-Credit-Recovery.pdf

Miron, G., Gulosino, C., & Horvitz, B. (2014). Section III: Full time virtual schools. In A. Molnar (Ed.), Virtual schools in the U.S. 2014 (pp. 55–73). Boulder, CO: National Education Policy Center. Retrieved from http://nepc.colorado.edu/files/virtual-2014-all-final.pdf

O’Dwyer, L. M., Carey, R., & Kleiman, G. (2007). A study of the effectiveness of the Louisiana algebra I online course. Journal of Research on Technology in Education, 39(3), 289–306.

Taylor, S., Clements, P., Heppen, J., Rickles, J., Sorensen, N., Walters, K., Allensworth, E., & Michelman, V. (2016). Getting back on track: The role of in-person instructional support for students taking online credit recovery. Washington, DC: American Institutes for Research. Retrieved from http://www.air.org/system/files/downloads/report/In-Person-Support-Credit-Recovery.pdf

Velasquez, A., Graham, C. R., & Osguthorpe, R. D. (2013). Caring in a technology-mediated online high school context. Distance Education, 34(1), 97–118. doi:10.1080/01587919.2013.770435