Introduction

From kindergarten through high school, one of the largest potential factors affecting educational outcomes is how strongly students are motivated and engaged in their interactions with teachers in the classroom (Pianta, Hamre, & Allen, 2012). For instance, children identified in kindergarten as at risk of school failure showed lower academic achievement at the end of first grade than their typically developing peers, but this achievement gap could be closed by their first-grade teachers’ provision of both instructional and emotional support (Hamre & Pianta, 2005). In secondary schools, when teaching practices were observed from the three perspectives of emotional support, classroom organization, and instructional support, the measured quality of teacher-student interactions predicted students’ academic achievement on end-of-year standardized tests (Allen et al., 2013).

Online learning is similar in this respect: students benefit from rich opportunities to interact with their online course teachers, which help them feel welcome, respected, and supported in their needs (e.g., Hawkins, Graham, Sudweeks, & Barbour, 2013). However, the communication demands, typical communication patterns, and ultimately the competencies required of teachers may be unique given the nature of technology-mediated communication and the resulting transactional distance (Harms, Niederhauser, Davis, Roblyer, & Gilbert, 2006). Understanding effective communication practices is therefore important for supporting students’ learning experiences in online courses.

Furthermore, a variety of communication practices have been identified as virtual schooling standards and best practices. These include sharing student progress with various stakeholders, providing rich opportunities for communication, providing immediate and meaningful feedback, and establishing institution-level policies that foster communication (Ferdig, Cavanaugh, DiPietro, Black, & Dawson, 2009). Accordingly, these interactivity components need to be examined when evaluating the success and effectiveness of any entity that offers online courses. The present study addressed these issues by examining multiple sources of data on communicative interactions between students and online course teachers, with the aim of enriching the existing literature in this line of research.

As one participant in Borup, Graham, and Drysdale (2014) put it, knowing how to teach online courses means learning how to communicate. Borup et al.’s study identified six categories of teacher engagement: facilitating discourse, designing and organizing, nurturing, instructing, monitoring, and motivating. Every category contained specific indicators that required communicative interactions and behaviors to be fulfilled. Regarding communication practices, research also found that students’ most preferred format was asynchronous text communication, compared with other modes such as synchronous or video conference methods (Murphy, Rodríguez-Manzanares, & Barbour, 2011). Because both studies relied on teacher reports, it is worth examining student perspectives on communication practices.

In an attempt to understand student-teacher interactions from the student perspective, Hawkins and colleagues (2013) surveyed students enrolled in courses offered by a supplemental statewide virtual school. The survey instrument measured student perceptions of the characteristics of communicative interactions with their instructors. The findings identified three dimensions of communication practices from the student perspective: feedback, procedural interactions, and social interactions. More importantly, the study tested connections between the perceived quality and frequency of communicative interactions and course outcomes. Two course outcomes were examined: dichotomous course completion and the grade awarded on a 12-point scale. Both outcomes were positively associated with the quality composite score and with scores on the three dimensions of feedback, procedural interactions, and social interactions. Based on the magnitude of the estimated coefficients, however, the study concluded that the associations with the perceived level and quality of interactions were practically significant for course completion but not for the grade awarded. For the grade awarded, an increase across the full range of the interaction measure (a 4-point change) corresponded to only a one-unit difference in grade, for instance, from B- to B.

These findings call attention to several points in need of further investigation. First, given the skewness of the grade data (for instance, 76% of the sample in Hawkins et al. (2013) received an A-), raw grades may be too crude to deliver insights into student learning outcomes in online courses, so more refined grade data are needed. Second, in addition to course completion status and the grade awarded, student satisfaction is worth exploring as an outcome variable given its importance in quality assurance. Third, to the best of the researchers’ knowledge, no study has explored actual communication transaction data in the K-12 online learning context.

Accordingly, to fill these gaps in the extant research, this study addressed three goals:

- Explore students’ end-of-course (EOC) survey responses to identify constructs related to satisfaction with the course they had just taken.

- Examine associations between outcome variables, including course grade, engagement, and satisfaction, and student perceptions of communication practices in the course.

- Investigate associations between student communication transactions and student performance in the course.

Methods

There were two study samples: the fall 2017 semester sample for the EOC survey data and the fall 2018 semester sample for the communication transaction data. In both samples, audit and withdrawn enrollments were excluded. Additionally, enrollments in the Advanced Placement (AP) Statistics course (3 course sections) were removed because the course was offered through a third-party provider, so no EOC survey or activity log records existed for the entire course.

The first data source, the EOC survey, is usually placed in the final unit folder in Blackboard for students to complete after they finish course activities but before they receive final grades. The survey contained 31 questions. For the present study, we selected 11 questions related to students’ evaluation of their course experience for the first research question and another 10 questions on student perceptions of communicative interactions with the course instructor for the second research question. The selected survey questions are discussed in detail in the next section.

The final research question was explored with activity log data from the messaging tool housed within the online classroom. Learning management systems (LMS) and student information systems (SIS) offer tools for enhancing communication in courses. The messaging tool, for instance, lets users send and receive one-to-one and one-to-many messages within the LMS. As a result, a database can accumulate records of communicative interactions among teachers and students, such as the timestamp of each transaction and identifying information on the communicators. The communication records were exported from Michigan Virtual’s SIS, in which the messaging tool was enabled for students’ use. For this study, we used only students’ outgoing transactions and excluded incoming records (i.e., messages sent by teachers). Lastly, we merged two types of activity log data: messaging tool records and other LMS data that track learner activity, such as the frequency and duration of logins and final grades. The final grade was calculated by dividing the total points earned by the total point value of all assignments and multiplying by 100. Because of the volume of communication tracking records, we limited the data to all high school courses in English language arts, social studies, mathematics, and science.
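The final grade formula above is a simple ratio of summed points. A minimal sketch, assuming per-assignment lists of points earned and points possible, with unsubmitted work entered as zero points earned; the function name and the gradebook values are hypothetical:

```python
def final_grade(points_earned, points_possible):
    """Final grade = total points earned / total point value of all assignments x 100."""
    total_possible = sum(points_possible)
    if total_possible <= 0:
        raise ValueError("total point value must be positive")
    return 100 * sum(points_earned) / total_possible


# Hypothetical gradebook: three assignments worth 50, 30, and 20 points;
# a missed assignment would be entered as 0 points earned.
print(final_grade([45, 24, 10], [50, 30, 20]))  # 79.0
```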

Three methods were used to analyze and summarize the data. The first study goal was a preliminary inquiry into data reduction using factor analysis, from which we expected new outcome variable(s) to be formed that reflected feedback gathered from students. To examine group differences in outcome variables, MANOVA was the main approach, because multiple, highly intercorrelated outcome variables were tested at once. Before running the MANOVA tests, we found that the study data failed to meet MANOVA assumptions, namely the absence of univariate and multivariate outliers and multivariate normality, as checked with the Shapiro-Wilk W test and boxplots. To address this issue, all outcome variables were log-transformed.

Furthermore, MANOVA tests are relatively robust to assumption violations when the design is balanced, that is, when each cell has an equal number of observations. However, the study sample had unbalanced cell sizes and failed the homogeneity of covariance matrices assumption according to Box’s M test. Accordingly, among the MANOVA test statistics we used Pillai’s trace criterion, which is relatively robust to violation of the homogeneity assumption in unbalanced designs. Once MANOVA indicated a significant group difference, t-tests and ANOVAs were used as post-hoc tests. Effect sizes were examined with Cohen’s d, with confidence intervals estimated through bootstrap resampling, for the t-tests and with eta squared for the ANOVA post-hoc tests.
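To show what Pillai’s trace measures, the sketch below computes V = tr(H(H + E)⁻¹), where H and E are the between-group and within-group sum-of-squares-and-cross-products (SSCP) matrices, from already transformed outcome vectors. It is a pure-Python illustration with hypothetical helper names (`sscp`, `solve`, `pillai_trace`), not the software used in the study, which the report does not name.

```python
from statistics import fmean


def sscp(rows, center):
    """Sum-of-squares-and-cross-products matrix of rows about a center vector."""
    p = len(center)
    out = [[0.0] * p for _ in range(p)]
    for row in rows:
        dev = [row[i] - center[i] for i in range(p)]
        for i in range(p):
            for j in range(p):
                out[i][j] += dev[i] * dev[j]
    return out


def solve(a, b):
    """Solve A X = B for square A via Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        lead = aug[col][col]
        aug[col] = [v / lead for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [rv - factor * cv for rv, cv in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def pillai_trace(groups):
    """Pillai's trace for a one-way MANOVA.

    groups: one list per group, each holding outcome vectors of equal length p.
    """
    rows = [r for g in groups for r in g]
    p = len(rows[0])
    grand = [fmean(r[i] for r in rows) for i in range(p)]
    total = sscp(rows, grand)                   # T = H + E
    error = [[0.0] * p for _ in range(p)]       # E: pooled within-group SSCP
    for g in groups:
        means = [fmean(r[i] for r in g) for i in range(p)]
        for i, row in enumerate(sscp(g, means)):
            for j, v in enumerate(row):
                error[i][j] += v
    hyp = [[total[i][j] - error[i][j] for j in range(p)] for i in range(p)]  # H
    x = solve(total, hyp)                       # X = T^-1 H
    return sum(x[i][i] for i in range(p))       # tr(T^-1 H) = tr(H T^-1)


# With one outcome, V equals SS_between / SS_total (13.5 / 17.5 here).
print(round(pillai_trace([[[1], [2], [3]], [[4], [5], [6]]]), 4))  # 0.7714
```

V ranges from 0 to min(p, k - 1) for p outcomes and k groups; larger values indicate that more of the total multivariate variation lies between groups.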

The last part of the analysis summarized the communication transaction data in heatmaps and a scatterplot. Heatmaps represent magnitude (here, the frequency of transactions) by color in a two-dimensional display (e.g., week by week across student groups). Using a student grouping variable based on decile ranks of final grades in the study sample, we made two heatmaps: one of week-by-week transaction frequencies across the 10 student groups, and one of total transactions for individual subject areas (x-axis) across the 10 student groups (y-axis). For the second heatmap, which shows density by subject area against performance group, we normalized the input matrix based on the distribution of its values. Without any scaling, a heatmap is not informative when the data include relatively high values, because the other cells with small values all look the same and small and smaller values cannot be distinguished. Normalization resolves this issue and can be applied to rows or to columns depending on the study’s needs. We set the scale argument to columns because, for this particular analysis, we were more interested in comparisons across subject areas than across student performance groups. A scatterplot was used to describe the distribution of transactions among individual teachers, that is, how many student outgoing messages a teacher received on average during the semester.
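The report does not name the plotting function, but its "scale argument" resembles the `scale` parameter of R’s `stats::heatmap()`, whose `"column"` option centers each column and divides it by its standard deviation. Assuming that behavior, a minimal Python sketch of column scaling (the function name and the counts are hypothetical):

```python
from statistics import fmean, stdev


def scale_columns(matrix):
    """Center each column at 0 and divide by its sample standard deviation (z-scores)."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    scaled = [[0.0] * n_cols for _ in range(n_rows)]
    for j in range(n_cols):
        col = [row[j] for row in matrix]
        mu, sd = fmean(col), stdev(col)
        for i in range(n_rows):
            # A constant column carries no contrast, so leave it at 0.
            scaled[i][j] = (matrix[i][j] - mu) / sd if sd > 0 else 0.0
    return scaled


# Hypothetical counts: rows = performance groups, columns = two subject areas.
counts = [[900, 30], [600, 20], [300, 10]]
print(scale_columns(counts))  # [[1.0, 1.0], [0.0, 0.0], [-1.0, -1.0]]
```

Both columns end up with the same pattern even though the first column’s counts are 30 times larger, which is why column scaling lets low-count subject areas show contrast on a shared color scale.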

Because of the volume of message transactions, we limited the transaction sample to core courses, namely all high school courses in English language arts, social studies, mathematics, and science, in the fall semester of the 2018-19 academic year (AY).

Results

Constructs related to course satisfaction identified from the EOC survey

Before presenting results on course satisfaction constructs, we briefly describe the EOC survey respondents, because their characteristics matter for interpreting the survey results. The study sample comprised 10,102 enrollment records for the fall semester of the 2017-18 AY. Of those enrollments, 13.73% completed the EOC survey. This respondent group (passing rate = 96.71%) was higher performing than both the entire study sample (83.58%) and the non-respondent group (81.76%). Course activity and performance variables showed the same gap between the two groups defined by EOC survey completion. A MANOVA test on the total count of LMS logins, the total LMS login duration in minutes, and the final grade indicated a statistically significant difference in course engagement and outcomes by EOC survey completion, F(3, 10032) = 62.08, p < .001; Pillai’s trace = 0.0182. That is, students who completed the EOC survey were more likely to engage actively with course activities and to earn higher final grades than their counterparts. Accordingly, the discussion below that relies on EOC survey data describes the perceptions, satisfaction, and experiences of a particular student group: high-performing students.
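The reported F statistic can be cross-checked against Pillai’s trace with the standard F approximation: for V with p outcomes, k groups, and N cases, s = min(p, k - 1), m = (|p - (k - 1)| - 1)/2, n = (N - k - p - 1)/2, and F = [(2n + s + 1)/(2m + s + 1)] · V/(s - V) on s(2m + s + 1) and s(2n + s + 1) degrees of freedom. In the sketch below, N = 10,036 is an assumption back-calculated from the reported error degrees of freedom (10,032 + 3 + 1), not a figure stated in the report, and the function name is hypothetical.

```python
def pillai_f(v, p, k, n_total):
    """Approximate F and degrees of freedom for Pillai's trace V (p outcomes, k groups, N cases)."""
    s = min(p, k - 1)
    m = (abs(p - (k - 1)) - 1) / 2
    n = (n_total - k - p - 1) / 2
    df1 = s * (2 * m + s + 1)
    df2 = s * (2 * n + s + 1)
    f = (df2 / df1) * v / (s - v)
    return f, df1, df2


f, df1, df2 = pillai_f(0.0182, p=3, k=2, n_total=10036)
print(round(f, 1), df1, df2)  # 62.0 3.0 10032.0
```

The result, F ≈ 62.0 on (3, 10032) degrees of freedom, is close to the reported F = 62.08; the small gap is consistent with V having been rounded to four decimals.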

Principal component factoring, a data reduction method, showed that the 11 EOC survey questions measured two main themes: students’ perceived quality of course content and format, and overall satisfaction. A two-factor solution was chosen, with an eigenvalue of 4.52 for factor 1 and 1.18 for factor 2. The first factor explained 45% of the total variance, and adding the second factor brought the explained variance to 56%.

After orthogonal rotation, the factor loadings more clearly indicated the two-factor structure. The four questions that contributed most clearly to factor 1 (loadings from 0.68 to 0.83) were:

- Overall, my experience in this MVS course was positive.

- I would take another online course from Michigan Virtual School.

- I would recommend taking an MVS online course to a friend.

- My MVS course helped prepare me to be successful in the future.

The two items below were also assigned to factor 1 because their loadings on it, although lower than those of the four items above, were greater than their loadings on factor 2 (0.39 vs. 0.17 and 0.36 vs. 0.17):

- My class provided me with a challenging curriculum and learning experience.

- My instructor was respectful and caring to me and to other students.

Five questions contributed most to defining factor 2:

- How easy was it to get started in this course?

- My course design and platform were easy to navigate.

- It was clear to me what I was supposed to learn, and I believe that I learned what was intended from the course.

- Directions for each lesson and/or assignment were made very clear.

- The links, media tools, and course content consistently opened, played, and/or functioned properly.

The question on overall experience also contributed considerably to the second factor, but we listed it under factor 1 because its loading there (0.68) was greater than its loading on factor 2 (0.54).

There are two ways to use these two factors as variables in models or tests: composite scores for each subset of items, or factor scores estimated from the factor analysis. Because composite scores inevitably lose some information, in particular the variance attributable to items assigned to the other factor, albeit to a lesser degree, the study used factor scores. See Appendix A for rotated factor loadings for all 11 survey questions.

Based on the common themes within each group of questions, the first factor was labeled “student course satisfaction” and the second “student perceived course quality.” Taken together, the EOC survey captured satisfaction with, and perceived quality of, course content and format as reported by students who actively participated in course activities and performed well in the course.

Communication practice and its associations with outcomes captured by EOC data

The EOC survey also included 10 questions with which the study explored student-reported communication practices and their association with outcomes. The first question examined was “When I encountered technical problems, there was adequate support provided to me by…” Responses on a 4-point Likert scale from 1 (strongly disagree) to 4 (strongly agree) were collected for four possible support providers: (a) a mentor or another staff member at their school building, (b) the online instructor in the course, (c) a family member, and (d) classmates at their school building or in the online class. The largest proportion of “strongly agree” responses was for online course teachers (41.27%), followed by school staff members (38.25%), family members (26.13%), and classmates (24.1%). When the two positive response categories (agree and strongly agree) were combined, the frequencies of positive responses followed the same order: the online course teacher (86.45%), the school staff member (83.58%), the family member (67.39%), and the classmate (64.23%).

Focusing on online course teachers, the study team again combined the four response categories (strongly agree, agree, disagree, strongly disagree) into two: positive (strongly agree, agree) and negative (strongly disagree, disagree). This two-level grouping variable allowed the study to test whether students who agreed that their online course teachers provided adequate technical support differed from their counterparts in course engagement, learning outcomes, course satisfaction, and perceived course quality. The MANOVA test found that the two groups of students differed overall (F(5, 1278) = 53.8, p < .001; Pillai’s trace = 0.1739). Post-hoc Welch tests identified where those differences came from.
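Welch’s test compares two group means without assuming equal variances. A minimal sketch of the statistic and its Welch-Satterthwaite degrees of freedom; the function name is hypothetical, and passing the negative-response group first is an assumption that reproduces the sign of the negative t values reported below. In practice, `scipy.stats.ttest_ind(x, y, equal_var=False)` returns the same statistic together with a p-value.

```python
from statistics import fmean, variance


def welch_t(x, y):
    """Welch's t = (mean(x) - mean(y)) / sqrt(var(x)/n_x + var(y)/n_y), plus Welch df."""
    vx = variance(x) / len(x)  # squared standard error of mean(x)
    vy = variance(y) / len(y)  # squared standard error of mean(y)
    t = (fmean(x) - fmean(y)) / (vx + vy) ** 0.5
    df = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return t, df


# Hypothetical final grades: negative responders first, positive responders second.
t, df = welch_t([70, 82, 88, 75], [90, 85, 94, 88, 91])
```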

There were significant differences between positive (agree or strongly agree) and negative (disagree or strongly disagree) responses in final grades (t = -2.35), course satisfaction (t = -8.63), and perceived course quality (t = -7.44). However, no significant group difference was found in either login frequency (t = 0.09) or login duration (t = 0.7). Accordingly, among EOC respondents, who were more likely to be high-achieving students according to the first research question, those who reported adequate technical support from their online course teachers achieved even higher final grades and tended to perceive the course experience more positively.

In addition to technical support, students were asked five questions specifically related to communicative interactions with instructors. First, students answered yes or no to “communication with my teacher as the best part of taking this course” and to “when I started this course, I received a welcome communication from my instructor that clearly explained to me the goals, expectations, policies and procedures, and how to get started.” Students were also asked how often they received “important and useful announcements and information posted by my instructor,” with five response categories: at the beginning and end of the course, every 2-4 weeks, weekly, 2-3 days per week, and daily. On a 5-point Likert scale from 1 (strongly disagree) to 5 (strongly agree), students also rated statements such as “My instructor provided me with specific and timely feedback about my learning and grades, explained things in multiple ways, and provided me strategies to succeed if I struggled in the course.” Lastly, the survey asked students to rate their preference for each of the following communication methods: the Blackboard Messaging Tool; email; text, SMS, or instant messaging; cell or telephone; and video chat/video conference.

Table 1 presents tests of group differences on the five outcome variables when students were grouped by their responses to these questions.

| Survey item (response distribution) | Grouping variable | MANOVA test | Final grade² | Login frequency | Login duration (min) | Satisfaction⁴ | Perceived quality⁴ |
|---|---|---|---|---|---|---|---|
| Best part: communication with teacher¹ (13.14%; n = 1400) | Dummy: No = 0, Yes = 1 | F(5, 1278) = 8.71***; Pillai’s trace = 0.0329 | 86.71 / 90.6; n.s.³ | 96.8 / 110.94; t = -2.74 | 3403.45 / 4144.67; t = -4.06 | -0.04 / 0.26; t = -4.63 | -0.04 / 0.26; t = -4.07 |
| Instructor-initiated communications | | | | | | | |
| Welcome message (No 4.25%, Yes 95.75%; n = 1284) | Dummy: No = 0, Yes = 1 | F(5, 1278) = 16.84***; Pillai’s trace = 0.0618 | 80.02 / 88.56; t = -2.21 | 95.4 / 98.57; n.s. | 3780.75 / 3490.78; n.s. | -0.561 / 0.03; t = -3.21 | -0.66 / 0.03; t = -3.98 |
| Announcements (2-3 days 9.88%, Begin/End 3.94%, Daily 6.8%, 3-4 weeks 9.88%, Weekly 69.5%; n = 1295) | Dummy: At most monthly = 0, At least weekly = 1 | F(5, 1278) = 11.42***; Pillai’s trace = 0.0428 | 85.01 / 88.70; t = -2.4 | 96.15 / 98.8; n.s. | 3429.79 / 3514.85; n.s. | -0.32 / 0.05 | -0.35 / 0.06; t = -4.23 |
| Feedback (Strongly disagree 3.01%, 6.8%, 17.37%, 32.66%, Strongly agree 40.15%; n = 1295) | Categorical: Negative, Neutral, Positive | F(10, 2556) = 35.75***; Pillai’s trace = 0.2454 | 81.5 / 85.61 / 89.71; F = 23.45 | 97.02 / 95.96 / 99.211; n.s. | 3404.52 / 3513.63 / 3513.86; n.s. | -0.87 / -0.39 / 0.21; F = 96.59 | -0.77 / -0.38 / 0.19; F = 78.31 |
| Communication method preference | | | | | | | |
| Messaging tool (Least 9.98%, 4.13%, 11.62%, 16.38%, Most 57.88%; n = 1282) | Categorical: Negative, Neutral, Positive | F(10, 2532) = 10.57***; Pillai’s trace = 0.0802 | 83.20 / 85.39 / 89.71; F = 21.84 | 88.49 / 98.66 / 100.32; F = 6.55 | 3038.33 / 3728.39 / 3557.5; F = 7.39 | -0.31 / -0.07 / 0.07; F = 11.58 | -0.44 / -0.12 / 0.1; F = 23.21 |
| Email (Least 10.73%, 7.02%, 16.25%, 38.41%, Most 27.6%; n = 1262) | Categorical: Negative, Neutral, Positive | n.s. | N/A⁵ | N/A | N/A | N/A | N/A |
| Instant messaging (Least 31.85%, 11.73%, 30.59%, 11.97%, Most 13.87%; n = 1262) | Categorical: Negative, Neutral, Positive | n.s. | N/A | N/A | N/A | N/A | N/A |
| Phone call (Least 42.29%, 32.59%, 13.51%, 6.76%, Most 4.85%; n = 1258) | Categorical: Negative, Neutral, Positive | F(10, 2486) = 2.35**; Pillai’s trace = 0.0802 | 89.08 / 86.46 / 85.53; F = 5.82 | n.s. | n.s. | n.s. | 0.04 / -0.07 / -0.19; F = 3.64 |
| Video conference (Least 74.37%, 10.40%, 9.37%, 3.41%, Most 2.46%; n = 1260) | Categorical: Negative, Neutral, Positive | n.s. | N/A | N/A | N/A | N/A | N/A |

Note 1. Multiple responses were allowed for this question, whose options were (a) engaging topics, content, and activities; (b) challenging assignments and learning opportunities; (c) communication with my teacher; (d) communication with my classmates; and (e) flexibility of the online environment.

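Note 2. Post-hoc results are presented as t statistics (two groups) or F statistics (three groups) that were significant at alpha = 0.05 for the five outcome variables of final grade, login frequency, login duration, course satisfaction, and perceived course quality. Each outcome cell lists group means in the order of the grouping variable’s coding (No/Yes, at most monthly/at least weekly, or negative/neutral/positive), followed by the post-hoc statistic.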

Note 3. n.s. denotes statistically non-significant.

Note 4. For Satisfaction and Perceived Quality, group means of factor scores are reported as descriptive statistics.

Note 5. When a MANOVA test shows no significant overall group difference, post-hoc tests are not needed; N/A indicates this case.

Of the five outcome variables, all but the final grade showed significant group differences when students were grouped by whether or not they named communication with the instructor as the best part of taking the course. That is, students who named communicating with their online teacher as the best part tended to score higher on indicators of course engagement and satisfaction. Responses to this question, however, did not differentiate student groups in terms of final grades.

The survey asked about three types of communicative interactions that teachers could initiate: welcome messages, announcements, and feedback. Students were asked to report (a) whether the goals, expectations, policies, and procedures for the course were well communicated at the beginning of the course; (b) how often they received important and useful announcements; and (c) whether specific and timely feedback and support were provided. Based on these questions, we divided students into those with positive reports (i.e., yes for the welcome message, at least weekly for announcements, and agree or strongly agree for feedback provision) and counterparts with negative reports. Significant group differences were found in the final grade, course satisfaction, and perceived course quality. In other words, students’ responses to questions about instructor-initiated communications were associated with course outcomes and overall satisfaction, but not with course engagement levels such as login frequency and duration.
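The grouping rules above can be written as small recoding functions. A sketch under stated assumptions: the response labels are hypothetical stand-ins for the survey’s categories, and the three-level feedback split assumes 1-2 = negative, 3 = neutral, and 4-5 = positive on the 5-point scale, which matches treating agree and strongly agree as positive but is not spelled out in the report.

```python
# Hypothetical labels for the announcement-frequency categories counted as "at least weekly".
AT_LEAST_WEEKLY = {"weekly", "2-3 days per week", "daily"}


def welcome_dummy(answer):
    """1 if the student reported receiving a welcome message, else 0."""
    return 1 if answer.strip().lower() == "yes" else 0


def announcement_dummy(frequency):
    """1 for announcements at least weekly; 0 for 'beginning and end' or 'every 2-4 weeks'."""
    return 1 if frequency in AT_LEAST_WEEKLY else 0


def feedback_group(score):
    """Collapse a 1-5 Likert rating into negative / neutral / positive (assumed cut points)."""
    if not 1 <= score <= 5:
        raise ValueError("expected a rating from 1 to 5")
    if score >= 4:
        return "positive"
    if score == 3:
        return "neutral"
    return "negative"
```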

More specifically, average final grades differed significantly, by 8.54 points, depending on whether students reported receiving a welcome message from their teachers, and by 3.69 points depending on whether teachers reportedly posted important and useful announcements and information at least weekly. We also found significant differences in satisfaction with and perceived quality of the course between the positively and negatively reporting groups. When students were divided into three groups according to positive, neutral, and negative responses about their teachers’ provision of support through feedback and additional explanations, those who agreed that such support was provided achieved better course outcomes and evaluated their course experiences more positively than their counterparts.

The bottom of Table 1 presents results for the survey questions asking about students’ preferred methods of communicating with their teachers. The methods included the messaging tool inside the online classroom, email, instant messaging, phone call, and video conference, and students rated their preference for each on a Likert scale from least preferred to most preferred.

Descriptive results indicated that the messaging tool was the most preferred method: it had the smallest share of least-preferred ratings (video conference = 74.37%, phone call = 42.29%, instant messaging = 31.85%, email = 10.73%, and messaging tool = 9.98%) and the largest share of most-preferred ratings (messaging tool = 57.88%, email = 27.6%, instant messaging = 13.87%, phone call = 4.85%, video conference = 2.46%). The study team again divided students into positive, neutral, and negative groups and tested group differences according to respondents’ preference for the messaging tool. Statistically significant group differences were found on all five outcome variables (i.e., final grade, login frequency, login duration, course satisfaction, and perceived course quality). That is, students who preferred the messaging tool in the online classroom as a communication method engaged more actively in the LMS overall, achieved higher course grades, and had a more positive course experience than students who did not.

For three other methods (email, instant messaging, and video conference), the MANOVA results were not significant, so no post-hoc tests on the five outcome variables were needed; students’ preferences for these methods were not associated with course grades, engagement levels, or course satisfaction. Lastly, we found significant group differences in the final grade and perceived course quality according to respondents’ preference for phone calls. Notably, students who preferred this method of communicating with their teachers scored lower on both outcomes than those who rated it as less preferred.

Effect size estimates showed that the significant differences were not negligible and, for some outcomes, were substantial. Overall, the Cohen’s d values for student reports of receiving a welcome communication from the teacher indicated medium or large effects by general rules of thumb (Whitehead, Julious, Cooper, & Campbell, 2016), with the largest effect on the final grade (d = 0.73). Student perceptions of technical support from their teachers also showed large effects on course satisfaction (d = 1.08) and perceived course quality (d = 0.71). Eta squared estimates indicated that both feedback and messaging tool preference had medium-sized effects on the final grade by general rules of thumb (Miles & Shevlin, 2001). See Appendix B for effect size estimates for each significant factor.
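The two effect sizes named above can be sketched as follows. This assumes the pooled-standard-deviation form of Cohen’s d, a percentile bootstrap with 2,000 resamples, and eta squared computed as SS_between / SS_total; the report does not state which variants or how many resamples it used, and all function names are hypothetical.

```python
import random
from statistics import fmean, variance


def cohens_d(x, y):
    """Cohen's d = (mean(y) - mean(x)) / pooled SD for two independent groups."""
    nx, ny = len(x), len(y)
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (fmean(y) - fmean(x)) / pooled ** 0.5


def bootstrap_ci_d(x, y, n_boot=2000, alpha=0.05, seed=1):
    """Percentile bootstrap CI for Cohen's d, resampling each group with replacement."""
    rng = random.Random(seed)
    boots = sorted(
        cohens_d(rng.choices(x, k=len(x)), rng.choices(y, k=len(y)))
        for _ in range(n_boot)
    )
    return boots[int(n_boot * alpha / 2)], boots[int(n_boot * (1 - alpha / 2)) - 1]


def eta_squared(groups):
    """Eta squared for a one-way ANOVA: SS_between / SS_total."""
    values = [v for g in groups for v in g]
    grand = fmean(values)
    ss_total = sum((v - grand) ** 2 for v in values)
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups)
    return ss_between / ss_total


print(eta_squared([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # 0.9
```

Resampling each group separately keeps the group sizes fixed, mirroring the unbalanced design; a bias-corrected (BCa) interval is a common alternative to the simple percentile interval shown here.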

Communication practice and its associations with outcomes captured by actual transaction data

The messaging tool lets users send and receive one-to-one and one-to-many messages within the course. The results for the second research question suggested that, unlike other communication methods such as email, phone calls, and instant messaging, students’ preference for this tool was associated with desirable outcomes, including the final grade, course engagement level, and positive perceptions of the course experience. With that in mind, the third research question asked whether that conclusion is supported by students’ actual transaction data in the message center.

The analysis used tracking data on students’ outgoing messages for the fall semester of the 2018-19 AY. The transaction timestamps were converted into weekly time variables. Data were collected over the semester as defined by the official school calendar, so the sample may omit some communicative interactions of students who were granted extensions and interacted with teachers during their extension. Because the study timeframe was divided into 7-day intervals from Monday to Sunday, the first week, which contained the first transaction on Saturday, August 18, 2018, covered only 2 days. Likewise, the final transactions at the time of data collection were timestamped Wednesday, January 23, 2019, so week 24 covered only 3 days. See Appendix C for the study timeframe alongside the 2018-19 Michigan Virtual Enrollment Calendar for the fall semester.
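The Monday-to-Sunday binning described above can be expressed as a whole-week offset from the Monday that opens week 1 (August 13, 2018, the Monday of the week containing the first transaction). A minimal sketch with a hypothetical function name; it reproduces the week numbers cited in this section, such as week 4 for September 3 and week 24 for January 23, 2019.

```python
from datetime import date, datetime

# Monday of the week containing the first transaction (Saturday, August 18, 2018).
WEEK1_START = date(2018, 8, 13)


def study_week(ts):
    """Map a date or datetime to a Monday-to-Sunday study week (week 1 = Aug 13-19, 2018)."""
    day = ts.date() if isinstance(ts, datetime) else ts
    return (day - WEEK1_START).days // 7 + 1


print(study_week(datetime(2018, 8, 18, 9, 30)))  # 1
print(study_week(date(2018, 9, 3)))              # 4
print(study_week(datetime(2019, 1, 23, 16, 0)))  # 24
```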

We also grouped enrollment records into deciles of their final grades. Table 2 summarizes final grades by this grouping variable.

| Decile group | 1st | 2nd | 3rd | 4th | 5th | 6th | 7th | 8th | 9th | 10th |
|---|---|---|---|---|---|---|---|---|---|---|
| n | 482 | 481 | 484 | 478 | 481 | 482 | 481 | 481 | 481 | 481 |
| Average final grade | 97.58 | 95.25 | 93.45 | 91.21 | 88.23 | 84.22 | 78.62 | 69.06 | 44.96 | 7.05 |
| (S.D.) | (0.96) | (0.55) | (0.52) | (0.75) | (1.01) | (1.34) | (2.18) | (3.43) | (13.56) | (5.205) |
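Groups like those in Table 2 can be approximated by ranking final grades from highest to lowest and cutting the ranking into ten nearly equal parts. A sketch under stated assumptions: group 1 holds the highest grades, and ties are broken by input order. The report does not describe its tie handling, and the slightly unequal group sizes above (478 to 484) may reflect tied grades being kept together, which this simple version does not do. The function name is hypothetical.

```python
def decile_groups(grades):
    """Assign groups 1-10 by final grade, 1 = highest tenth, 10 = lowest tenth.

    Ties are broken by input order (a simplifying assumption).
    """
    n = len(grades)
    order = sorted(range(n), key=lambda i: grades[i], reverse=True)  # stable sort
    groups = [0] * n
    for rank, idx in enumerate(order):
        groups[idx] = rank * 10 // n + 1
    return groups
```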

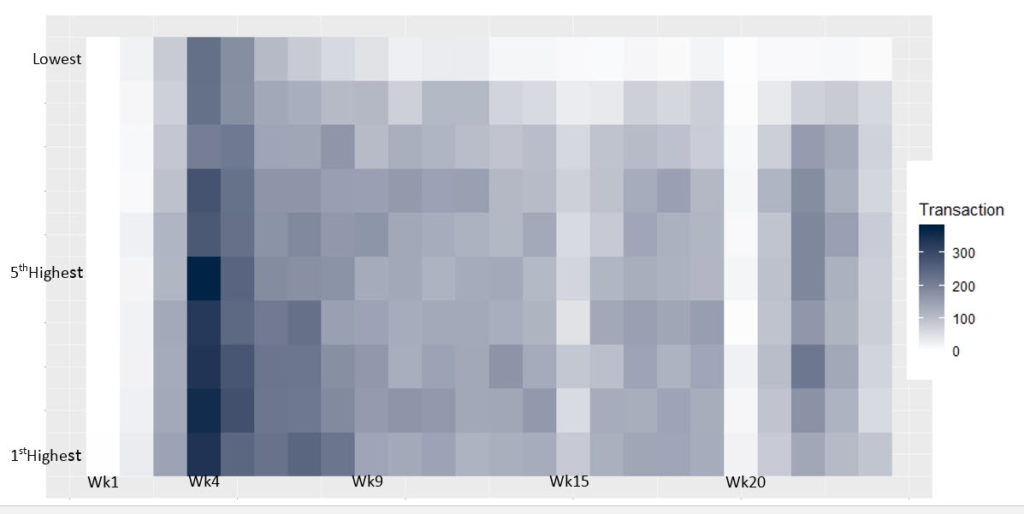

Figure 1 presents the density of week-by-week communicative interactions for each student group, from the highest-performing to the lowest-performing group. The heatmap displays a 24 × 10 array in which color represents the frequency of students’ outgoing messages for each of the 10 final-grade groups across 24 weeks.
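The 24 × 10 array behind such a heatmap is a simple count table. A minimal sketch, assuming each outgoing message has already been assigned a study week (1-24) and its sender’s performance group (1-10); the function name and the sample records are hypothetical.

```python
def frequency_matrix(messages, n_weeks=24, n_groups=10):
    """Count outgoing messages in a grid: rows = weeks 1..n_weeks, columns = groups 1..n_groups."""
    grid = [[0] * n_groups for _ in range(n_weeks)]
    for week, group in messages:
        grid[week - 1][group - 1] += 1
    return grid


# Hypothetical (week, group) pairs, one per outgoing student message.
grid = frequency_matrix([(4, 1), (4, 1), (4, 10), (24, 5)])
```

The resulting grid can then be passed to any heatmap routine; this figure uses the raw counts, whereas the subject-area heatmap was normalized by column.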

Overall, the most recognizable feature was that communicative transactions occurred predominantly during week 4 (September 3 to 9, 2018). By this week, all classes on both enrollment schedules (i.e., Semester 1 Start 1 on Monday, August 20, and Start 2 on Monday, August 27) had begun. Students were therefore actively sending introduction messages and responding to welcome messages and initial course announcements, which produced a sharp increase in communicative interactions with their teachers. Most messages had the subject “About me” or “Re: Introduction (of a teacher),” and some teachers gave students an assignment such as “sending a message to your instructor” or “introducing yourself.” We often found questions about the textbook and syllabus, along with a few messages about specific tasks and learning activities. However, detailed content analysis is beyond the scope of this report.

The greater density among high-achieving groups was also evident in the week 4 results: cells for the five highest-achieving groups were darker than those for the other five groups. Note that the highest group had an average final grade of 97.58 and the fifth highest group had 88.23. High-achieving groups also maintained communicative interactions with their teachers to some degree over several subsequent weeks, for instance, through week 8 (October 1 to 7, 2018).

In the lowest performing group, with an average final grade of 7.05, transaction frequencies approached zero from week 10 (October 15 to 21, 2018) onward. The second lowest performing group showed greater density than the lowest group, yet its course outcome was similar: like the lowest group, its average final grade was below 60 points. Notably, the sixth to ninth groups share visually similar patterns on the heatmap, but the ninth group did not achieve a passing average final grade.

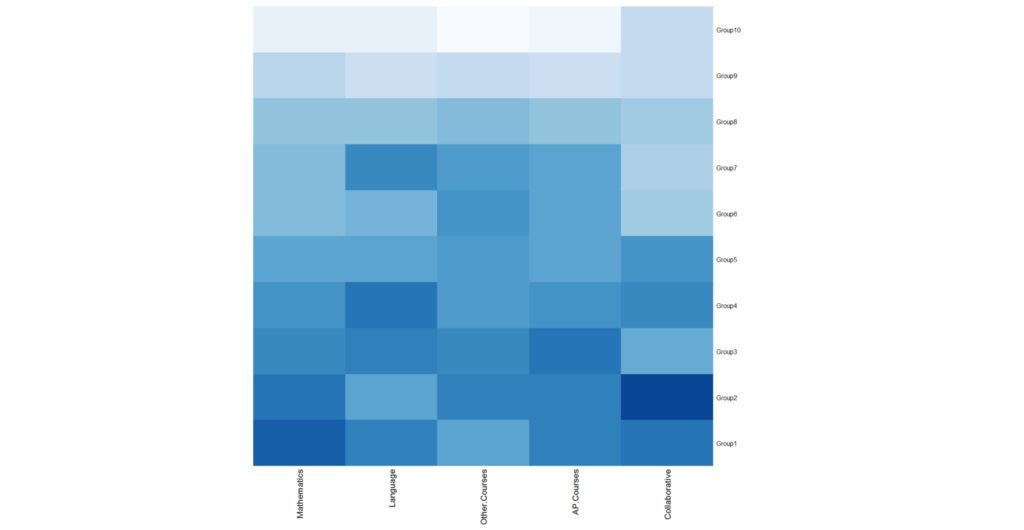

The second heatmap displays the frequencies of communicative actions by performance group across subject areas. The ticks on the x-axis represent subject areas, including mathematics, English (British) language and literature, AP courses, and other courses such as science and social studies. The categories are not mutually exclusive; for instance, records from AP Statistics courses count toward both mathematics and AP courses. The collaborative category covers course sections that were not open to the general public but were offered only to specific school districts. One noticeable feature of these courses is that the partnered school districts had Michigan Virtual course content taught by their own teachers rather than by Michigan Virtual instructors.

Figure 2 displays the distribution of values across subject areas for each performance group. Methodologically, note that the input matrix was normalized. Without any scaling, the original heatmap was often uninformative when the data included relatively high values, such as those for mathematics in our data. Such high values made cells with small values look alike, obscuring the distinction between small and smaller values, for instance, between Language and Other Courses. Normalization resolves this issue and can be applied to rows or to columns depending on the study’s needs. We applied the scale argument to columns (i.e., course types) because, for this particular analysis, we were more interested in comparing communications by subject area than by student performance group. Accordingly, this heatmap is best read by comparing density across subject areas within each performance group. The heatmaps of normalized data do not include a chart legend.
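The column scaling described above can be illustrated with a z-score transformation of each column. This sketch assumes that “scale” means centering each column on its mean and dividing by its sample standard deviation, as the `scale = "column"` option of R’s `heatmap()` does; whether the authors used that exact function is not stated, and `scale_columns` is a hypothetical name.

```python
import statistics


def scale_columns(matrix):
    """Z-score each column: subtract the column mean, divide by the column SD."""
    columns = list(zip(*matrix))
    scaled = []
    for col in columns:
        mu = statistics.mean(col)
        sd = statistics.stdev(col)  # sample SD (n - 1 denominator)
        # A constant column carries no contrast; map it to zeros.
        scaled.append([(x - mu) / sd if sd > 0 else 0.0 for x in col])
    return [list(row) for row in zip(*scaled)]


# Usage: a large-valued column (e.g., mathematics) next to a small-valued one
raw = [[100, 1],
       [50, 2],
       [0, 3]]
print(scale_columns(raw))  # [[1.0, -1.0], [0.0, 0.0], [-1.0, 1.0]]
```

After scaling, both columns span the same range, so the differences within the small-valued column become as visible as those within the large-valued one.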

The overall patterns confirmed the results of the previous heatmap analysis: higher performing groups exhibited greater frequencies of student-outgoing communications. The mathematics category illustrates this finding well, with density gradually decreasing from Group 1 to Group 10. Interestingly, AP courses showed a similar pattern with two differences: the middle groups (Groups 4 to 7) also showed some density, and the greatest density was found in Group 3 rather than in the two highest performing groups.

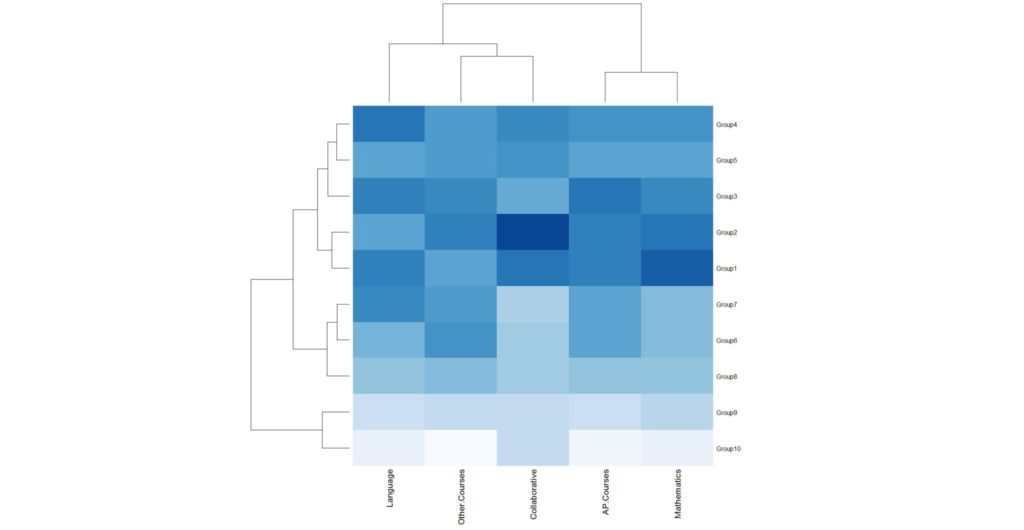

Another way to visually identify patterns is to reorder the cells of a heatmap according to cluster analysis results. Cluster analysis sorts the resulting densities across columns (subject areas in the present study) and/or rows (student performance groups) by successively joining the closest pairs based on their distance (e.g., mathematics and AP courses). A dendrogram, or hierarchical tree, is commonly used to represent clustering results. Figure 3 presents the results of a cluster analysis. It uses the same matrix and heatmap calculation as the Figure 2 heatmap, but the rows and columns of the matrix are permuted based on the similarity of the data, so that similar values are placed near each other.
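The row and column reordering can be sketched with a small agglomerative clustering routine. This is a simplified stand-in, not the authors’ code: it assumes Euclidean distance and complete linkage (the defaults of R’s `dist()` and `hclust()`), and it takes the leaf order directly from the merge sequence, whereas plotting functions typically also reorder dendrogram branches. The function names are hypothetical.

```python
import math


def complete_linkage_order(vectors):
    """Return a leaf order from agglomerative clustering (complete linkage)."""
    def dist(i, j):
        return math.dist(vectors[i], vectors[j])  # Euclidean distance

    clusters = [[i] for i in range(len(vectors))]
    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Complete linkage: distance between the farthest members.
                d = max(dist(i, j) for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        merged = clusters[a] + clusters[b]  # merged members stay adjacent
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)]
        clusters.append(merged)
    return clusters[0]


def reorder(matrix):
    """Permute rows and columns so that similar profiles sit next to each other."""
    row_order = complete_linkage_order(matrix)
    col_order = complete_linkage_order([list(c) for c in zip(*matrix)])
    ordered = [[matrix[r][c] for c in col_order] for r in row_order]
    return ordered, row_order, col_order


# Usage: rows 0/2 and 1/3 are similar, as are columns 0/1 and 2/3
m = [[5, 5, 0, 0],
     [0, 0, 5, 5],
     [5, 4, 0, 1],
     [1, 0, 5, 4]]
_, rows, cols = reorder(m)
print(rows, cols)  # [0, 2, 1, 3] [0, 1, 2, 3]
```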

The clustering results indicated one cluster joining AP and mathematics courses and another joining language and other courses (including social studies and science), each sharing similar densities of communicative transactions. Notably, dark cells were found primarily in student performance groups 1 to 3 for mathematics and AP courses, whereas they extended from performance group 1 to 7 for language and “other” courses. With respect to variation by student performance group, three clusters emerged: the five highest performing groups, the middle three groups, and the two lowest performing groups.

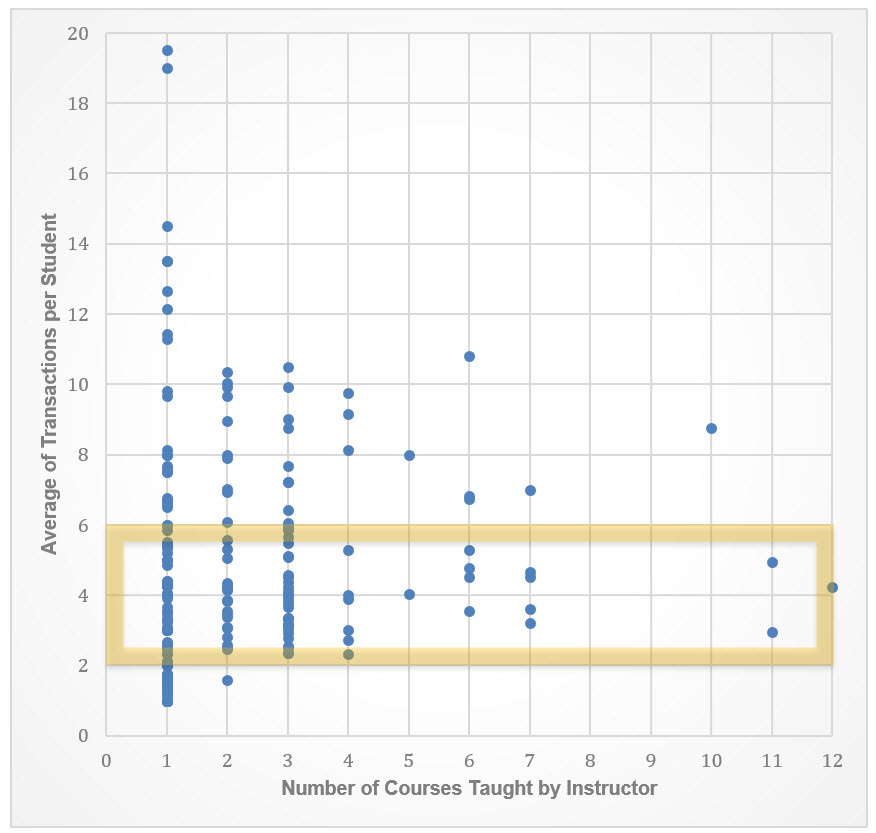

Lastly, we summarized the distribution of instructors by their degree of communicative interaction. The study data involved student-outgoing communications with 208 instructors, who each taught between 1 and 12 courses. Among them, 112 instructors taught one course and another 69 taught two or three courses. Figure 4 is a scatter plot of the average number of transactions per student in each instructor’s caseload against the number of courses the instructor taught. Each data point represents one instructor, with the average number of student-outgoing messages within his or her caseload on the y-axis and the number of courses taught on the x-axis.

The instructor-level averages were widely spread among those who taught one course; by contrast, no instructor who taught more than one course had an average greater than 12. Thirty-two instructors had fewer than two student-outgoing messages per student on average, and the majority of them taught only one course. The most common range of averages was two to fewer than four transactions per student (n = 72), followed by four to fewer than six transactions (n = 50). Accordingly, approximately 60% of instructors in the study sample (122 of 208) had averages of two to six student-outgoing messages per student, across the different numbers of courses they taught during the fall semester.
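The instructor-level summary amounts to dividing each instructor’s student-outgoing messages by the size of his or her caseload and tallying the results in bins of width two. A minimal sketch with hypothetical names and illustrative inputs (the per-instructor message totals and caseload sizes are assumed to be available); the last line reproduces the share quoted above, since (72 + 50) / 208 ≈ 0.587.

```python
from collections import Counter


def average_per_student(message_counts, caseloads):
    """Messages received per student, for each instructor (hypothetical inputs)."""
    return {inst: message_counts.get(inst, 0) / caseloads[inst] for inst in caseloads}


def bin_counts(values, width=2.0):
    """Count values in half-open bins [0, 2), [2, 4), ... of the given width."""
    tally = Counter(int(v // width) for v in values)
    return {(b * width, (b + 1) * width): tally[b] for b in sorted(tally)}


# Usage with illustrative instructors A and B
avgs = average_per_student({"A": 10, "B": 3}, {"A": 4, "B": 3})
print(avgs)                        # {'A': 2.5, 'B': 1.0}
print(bin_counts(avgs.values()))   # {(0.0, 2.0): 1, (2.0, 4.0): 1}

# Share of instructors averaging two to six messages per student in the study
print(round((72 + 50) / 208, 3))   # 0.587
```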

Discussion

The purpose of this study was to understand the characteristics of students’ communicative interactions with their instructors in online courses. To that end, the study explored student EOC survey data and communication transaction records, and several key findings emerged.

First, students who perceived communication as the best part of the learning activities in their online course were more likely to engage in course content and to be satisfied with the course experience. The present study also found that course outcomes and satisfaction were significantly related to students’ perceptions of the welcome message, course announcements, and feedback provided by their instructor. Although we believe that every instructor sent a welcome communication at the beginning of the semester, approximately 5% of the study sample responded negatively to the corresponding question, and their learning outcomes tended to lag significantly behind. In interpreting this finding, note that these students were not simply disagreeing that they had received a welcome message; they disagreed that they had received “a welcome communication from my instructor that clearly explained to me the goals, expectations, policies and procedures, and how to get started.” Accordingly, online course instructors need to ensure that all of these elements are clearly communicated to every student in their welcome message.

When we partitioned survey responses regarding timely feedback and subsequent support into three groups (positive, neutral, and negative responses), approximately three quarters of the study sample agreed that they had received feedback and subsequent support. Significant differences were found among the three groups in the final grade and the two course satisfaction indicators. The final grades of the positive and negative groups differed by 8.21% of the total points in a course. These findings underscore the importance of the teacher’s role in providing students with specific and timely feedback and individualized learning support based on it. However, because the effect size in the present study was below the medium level, this finding warrants further discussion.
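For reference, eta-squared for a one-way comparison of response groups is the share of the total variance in an outcome that lies between groups, η² = SS_between / SS_total. The sketch below computes it from raw scores; the function name is hypothetical and the toy scores are illustrative only. Commonly cited benchmarks treat an η² of about 0.06 as medium.

```python
import statistics


def eta_squared(*groups):
    """One-way eta-squared: between-group sum of squares over total sum of squares."""
    values = [x for g in groups for x in g]
    grand_mean = statistics.mean(values)
    ss_total = sum((x - grand_mean) ** 2 for x in values)
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    return ss_between / ss_total


# Usage with two toy groups; three or more groups are passed the same way
print(round(eta_squared([1, 2, 3], [4, 5, 6]), 4))  # 0.7714
```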

Across twelve meta-analyses involving 196 empirical studies, the effect sizes of feedback averaged 0.79 (Hattie & Timperley, 2007), which is a “large” effect size according to general rules of thumb (Whitehead, et al., 2016). The relatively small effect size of feedback in the current study may be explained by the considerable variability of effect sizes across forms of feedback; in other words, some forms of feedback affect student outcomes more powerfully than others (Hattie & Timperley, 2007). The highest effect sizes were often found for task-level feedback, for example, providing cues. Other forms of feedback, such as goal- or evaluation-related feedback and corrective feedback, showed “medium” magnitudes ranging from 0.37 to 0.46. In particular, corrective feedback was more powerful when its information was based on students’ correct rather than incorrect responses. For student-teacher communication in the form of feedback to be effective, particularly for inefficient or struggling learners, it may therefore be better to proactively provide elaborations and multiple presentations of learning content, as a form of feed up and/or feed forward (Hattie & Timperley, 2007, p. 86), rather than waiting until feedback on misunderstandings and errors is needed.

The most noticeable result is the significant group difference in course engagement, satisfaction, and outcomes in favor of students who prefer messaging tools as a communication method in the course. For the question about messaging tools, survey responses were divided into three groups: negative, neutral, and positive. Statistically significant differences among the three groups were found on all five dependent variables (i.e., final grade, login frequency, login duration, satisfaction, and perceived course quality). The positive response group (i.e., students who preferred messaging tools when communicating with the instructor) had the highest group averages on four of these variables, all except login duration, on which the neutral response group had the highest average. Accordingly, encouraging students to actively use the messaging tools built into the online classroom may foster an effective behavior pattern, such as logging into the LMS frequently without staying in it for overly long periods.

The last part of the study analyzed communicative transaction records from the in-house messaging tool, confirming the significance of students’ use of messaging tools. In particular, the pattern of more student-outgoing messages accompanying better final outcomes was clearly evident in mathematics and AP courses. However, three quarters of instructors in the study sample received only one to six messages per student on average. Instructional practices that motivate students to use the messaging tool may therefore be needed more often.

In concluding this report, it is worth revisiting the Student Guide to Online Learning[1]. This guide highlights the importance of communication in spoken and written language, and it advises students to first learn how to contact and ask for help from the various people involved in their online learning, including the course instructor, on-site mentor, technology staff, help desk, parent/guardian, and peers. In the guide, online course instructors are among the most viable human resources in the support structure. To provide more specific guidance, communication skills could be added to the existing readiness domains: technology skills, work and study habits, learning style, technology/connectivity, time management, interest/motivation, reading/writing skills, and support services. Reflecting this study’s findings, a rubric for the communication domain could define “more ready” as a student who has excellent communication skills, is strongly motivated to contact the various people involved in his or her learning (in particular, the online course instructor), and is comfortable using various communication tools, such as the messaging tools in the online classroom.

References

Allen, J., Gregory, A., Mikami, A., Lun, J., Hamre, B., & Pianta, R. (2013). Observations of effective teacher–student interactions in secondary school classrooms: Predicting student achievement with the classroom assessment scoring system—secondary. School Psychology Review, 42, 76.

Borup, J., Graham, C. R., & Drysdale, J. S. (2014). The nature of teacher engagement at an online high school. British Journal of Educational Technology, 45, 793–806.

Bransford, J. D., Brown, A. L., & Cocking, R. R. (Eds.). (1999). How people learn: Brain, mind, experience, and school. Washington, DC: National Academy Press.

Ferdig, R., Cavanaugh, C., DiPietro, M., Black, E., & Dawson, K. (2009). Virtual schooling standards and best practices for teacher education. Journal of Technology and Teacher Education, 17, 479–503.

Hamre, B. K., & Pianta, R. C. (2005). Can instructional and emotional support in the first-grade classroom make a difference for children at risk of school failure? Child Development, 76(5), 949–967.

Harms, C. M., Niederhauser, D. S., Davis, N. E., Roblyer, M. D., & Gilbert, S. B. (2006). Educating educators for virtual schooling: Communicating roles and responsibilities. Journal of Communication, 16, 17–24.

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77, 81–112. https://doi.org/10.3102/003465430298487

Hawkins, A., Graham, C. R., Sudweeks, R. R., & Barbour, M. K. (2013). Academic performance, course completion rates, and student perception of the quality and frequency of interaction in a virtual high school. Distance Education, 34(1), 64–83. https://doi.org/10.1080/01587919.2013.770430

Miles, J., & Shevlin, M. (2001). Applying regression and correlation: A guide for students and researchers. London: Sage.

Murphy, E., Rodríguez-Manzanares, M. A., & Barbour, M. (2011). Asynchronous and synchronous online teaching: Perspectives of Canadian high school distance education teachers. British Journal of Educational Technology, 42, 583–591.

Pianta, R. C., Hamre, B. K., & Allen, J. P. (2012). Teacher-student relationships and engagement: Conceptualizing, measuring, and improving the capacity of classroom interactions. In Handbook of research on student engagement (pp. 365–386). Boston, MA: Springer.

Whitehead, A. L., Julious, S. A., Cooper, C. L., & Campbell, M. J. (2016). Estimating the sample size for a pilot randomised trial to minimise the overall trial sample size for the external pilot and main trial for a continuous outcome variable. Statistical Methods in Medical Research, 25, 1057–1073.

[1] https://michiganvirtual.org/wp-content/uploads/2017/03/studentguide_508.pdf

Appendix A. Rotated Factor Loadings and Unique Variances

| Item | Factor 1 | Factor 2 | Uniqueness |
|---|---|---|---|
| Easy Start | 0.0816 | 0.7042 | 0.4974 |
| Easy Navigate | 0.2145 | 0.7350 | 0.4137 |
| Clear Goal | 0.4533 | 0.6660 | 0.3509 |
| Clear Direction | 0.3334 | 0.6909 | 0.4115 |
| Functioning Properly | 0.1168 | 0.6322 | 0.5867 |
| Challenging | 0.3837 | 0.1733 | 0.8228 |
| Caring | 0.3637 | 0.1693 | 0.8391 |
| Overall Satisfaction | 0.6755 | 0.5410 | 0.2510 |
| Another Course | 0.7939 | 0.1354 | 0.3514 |
| Recommend | 0.8239 | 0.1904 | 0.2849 |
| Future | 0.7752 | 0.1973 | 0.3601 |
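Under an orthogonal rotation (e.g., varimax), each item’s uniqueness equals one minus its communality, the sum of its squared loadings. The check below is a sketch that assumes an orthogonal rotation, since the rotation method is not stated here; it recomputes the last column of Appendix A from the two loading columns, and every row agrees with the reported uniqueness to within 0.001.

```python
# Rotated loadings (Factor 1, Factor 2) and reported uniqueness from Appendix A
APPENDIX_A = {
    "Easy Start": (0.0816, 0.7042, 0.4974),
    "Easy Navigate": (0.2145, 0.7350, 0.4137),
    "Clear Goal": (0.4533, 0.6660, 0.3509),
    "Clear Direction": (0.3334, 0.6909, 0.4115),
    "Functioning Properly": (0.1168, 0.6322, 0.5867),
    "Challenging": (0.3837, 0.1733, 0.8228),
    "Caring": (0.3637, 0.1693, 0.8391),
    "Overall Satisfaction": (0.6755, 0.5410, 0.2510),
    "Another Course": (0.7939, 0.1354, 0.3514),
    "Recommend": (0.8239, 0.1904, 0.2849),
    "Future": (0.7752, 0.1973, 0.3601),
}


def uniqueness(*loadings):
    """1 - communality (sum of squared loadings), assuming orthogonal factors."""
    return 1 - sum(x ** 2 for x in loadings)


for item, (f1, f2, reported) in APPENDIX_A.items():
    print(f"{item:22s} computed={uniqueness(f1, f2):.4f} reported={reported:.4f}")
```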

Appendix B. Effect Size (Cohen’s d or Eta-square)

Outcome variables: Final Grades, Login Frequency, Login Duration, Satisfaction, Perceived Quality.

| Survey item | Statistic | Reported estimates |
|---|---|---|
| Technical Support | Coefficient (SE), 95% CI | -0.38 (0.09) -0.56 ~ -0.2; -1.08 (0.13) -1.33 ~ -0.84; -0.71 (0.1) 7.42 ~ -1.43 |
| Best Part – Communication | Coefficient (SE), 95% CI | -0.28 (0.10) -0.47 ~ -0.09; -0.32 (0.10) -0.52 ~ -0.13; -0.31 (0.07) -0.44 ~ -0.17; -0.30 (0.07) 0.45 ~ -0.15 |
| Welcome Communication | Coefficient (SE), 95% CI | -0.73 (0.28) -1.27 ~ -0.19; -0.59 (0.19) -0.97 ~ -0.21; -0.69 (0.17) -1.02 ~ -0.36 |
| Announcement at Least Weekly | Coefficient (SE), 95% CI | -0.31 (0.11) -0.53 ~ -0.1; -0.37 (0.1) -0.57 ~ -0.18; -0.41 (0.1) -0.6 ~ -0.21 |
| Feedback | Eta-square (df), 95% CI | 0.05 (2) 0.03 ~ 0.08; 0.13 (2) 0.1 ~ 0.16; 0.11 (2) 0.08 ~ 0.14 |
| Messaging Tool Preferred | Eta-square (df), 95% CI | 0.05 (2) 0.02 ~ 0.07; 0.006 (2) 0.0002 ~ 0.02; 0.007 (2) 0.0005 ~ 0.02; 0.02 (2) 0.006 ~ 0.03; 0.04 (2) 0.017 ~ 0.06 |

Coefficients are Cohen’s d with standard errors in parentheses; their 95% confidence intervals are based on 200 bootstrap replications.
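The Cohen’s d rows above report bootstrap confidence intervals based on 200 replications. The sketch below shows one common way to obtain such an interval, the percentile bootstrap with a pooled-SD Cohen’s d. Whether the authors used the percentile method or another bootstrap interval is not stated, and the function names and toy data are hypothetical.

```python
import random
import statistics


def cohens_d(a, b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    if pooled_var == 0:
        raise ValueError("pooled variance is zero")
    return (statistics.mean(a) - statistics.mean(b)) / pooled_var ** 0.5


def bootstrap_ci(a, b, reps=200, alpha=0.05, seed=0):
    """Percentile bootstrap CI for Cohen's d (each group resampled with replacement)."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(reps):
        ra = rng.choices(a, k=len(a))
        rb = rng.choices(b, k=len(b))
        try:
            estimates.append(cohens_d(ra, rb))
        except ValueError:  # skip degenerate resamples with no variance
            continue
    estimates.sort()
    m = len(estimates)
    lower = estimates[int(alpha / 2 * m)]
    upper = estimates[int((1 - alpha / 2) * m) - 1]
    return lower, upper


# Usage with toy scores for two response groups
group_a = list(range(1, 11))
group_b = list(range(6, 16))
print(round(cohens_d(group_a, group_b), 3))  # -1.651
print(bootstrap_ci(group_a, group_b, reps=200, seed=1))
```

Resampling each group separately preserves the group sizes, which keeps the pooled standard deviation comparable across replications.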

Appendix C. Time Series in the Study

| Week | Start | End | Michigan Virtual Academic Calendar |
|---|---|---|---|
| Week 1 | 18-Aug-2018 | 19-Aug-2018 | |
| Week 2 | 20-Aug-2018 | 26-Aug-2018 | Monday 8/20/18: Semester 1 (20 weeks) Start 1; Trimester 1 (13 weeks) Start 1 |
| Week 3 | 27-Aug-2018 | 02-Sep-2018 | Monday 8/27/18: Semester 1 (20 weeks) Start 2; Trimester 1 (13 weeks) Start 2 |
| Week 4 | 03-Sep-2018 | 09-Sep-2018 | Tuesday 9/4/18: Trimester 1 (13 weeks) Start 3 |
| Week 5 | 10-Sep-2018 | 16-Sep-2018 | |
| Week 6 | 17-Sep-2018 | 23-Sep-2018 | |
| Week 7 | 24-Sep-2018 | 30-Sep-2018 | |
| Week 8 | 01-Oct-2018 | 07-Oct-2018 | |
| Week 9 | 08-Oct-2018 | 14-Oct-2018 | |
| Week 10 | 15-Oct-2018 | 21-Oct-2018 | |
| Week 11 | 22-Oct-2018 | 28-Oct-2018 | |
| Week 12 | 29-Oct-2018 | 04-Nov-2018 | |
| Week 13 | 05-Nov-2018 | 11-Nov-2018 | |
| Week 14 | 12-Nov-2018 | 18-Nov-2018 | Sunday 11/18/18: Trimester 1 (13 weeks) End 1 |
| Week 15 | 19-Nov-2018 | 25-Nov-2018 | Monday 11/19/18: Trimester 2 (14 weeks) Start 1; Sunday 11/25/18: Trimester 1 (13 weeks) End 2 |
| Week 16 | 26-Nov-2018 | 02-Dec-2018 | Monday 11/26/18: Trimester 2 (14 weeks) Start 2 |
| Week 17 | 03-Dec-2018 | 09-Dec-2018 | Monday 12/3/18: Trimester 1 (13 weeks) End 3; Monday 12/3/18: Trimester 2 (14 weeks) Start 3 |
| Week 18 | 10-Dec-2018 | 16-Dec-2018 | |
| Week 19 | 17-Dec-2018 | 23-Dec-2018 | |
| Week 20 | 24-Dec-2018 | 30-Dec-2018 | |
| Week 21 | 31-Dec-2018 | 06-Jan-2019 | Sunday 1/6/19: Semester 1 (20 weeks) End 1 |
| Week 22 | 07-Jan-2019 | 13-Jan-2019 | Sunday 1/13/19: Semester 1 (20 weeks) End 2 |
| Week 23 | 14-Jan-2019 | 20-Jan-2019 | |
| Week 24 | 21-Jan-2019 | 23-Jan-2019 | |